Linking an Oracle RAC source database using a standalone host environment

The standard way to link an Oracle RAC database as a dSource in the Delphix workflow is to provide the name or IP of one of the cluster nodes; discovery then finds the other cluster members, listeners, and databases. This is done by adding an 'Oracle Cluster' type Environment in the Add Environment wizard. The cluster nodes are added to the Environment using the hostname/IP returned by the 'olsnodes' command, and in most cases this is sufficient. There may, however, be cases where this is undesirable, such as wanting to run snapsync/logsync traffic over a second network. An 'Oracle Cluster' type Environment currently stores a single IP for each cluster node, and this is the IP associated with the node name returned by the 'olsnodes' command. Using a Standalone Environment is one way to work around this limitation: define the Environment using the node's IP on the network that snapsync/logsync should run over. The Delphix Engine should also have a NIC connected to this network. When both the Delphix Engine and the source servers have NICs on this second network, there is no need to configure static routes on the Delphix Engine or the source server.
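As a rough illustration of the "no static routes needed" condition, the following Python sketch checks whether a Delphix Engine IP falls inside the subnet of a source node's interface on the second network. The function name and all addresses are hypothetical and chosen only for the example; it is not part of any Delphix tooling.

```python
import ipaddress


def on_same_subnet(source_interface: str, engine_ip: str) -> bool:
    """Return True if engine_ip lies inside the subnet of source_interface.

    source_interface is an address with a prefix length,
    for example "192.168.50.11/24".
    """
    network = ipaddress.ip_interface(source_interface).network
    return ipaddress.ip_address(engine_ip) in network


if __name__ == "__main__":
    # Hypothetical addresses on the second network (assumptions, not real hosts).
    print(on_same_subnet("192.168.50.11/24", "192.168.50.5"))
    print(on_same_subnet("192.168.50.11/24", "192.168.60.5"))
```

If the check returns False for the addresses in use, traffic between the two hosts would need to be routed, which is the situation the second NIC on the Delphix Engine is meant to avoid.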

Ensure that OS and DB users for Delphix have been configured and meet requirements. Create the OS user on each node using the exact same settings. Always use the hostchecker to verify the source and target environments are ready for use with Delphix.

Even though a single node will be used for linking, it may be desirable to unlink and relink the source to a different node in the future, so make sure the Delphix OS account is configured identically on all nodes. Create a toolkit directory in the same location on each node and ensure permissions are set to 0770.
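The toolkit directory step could be scripted along the following lines. This is a minimal sketch, assuming it is run as the Delphix OS user on each node; the helper name is hypothetical, and the demo uses a temporary directory rather than a real toolkit path.

```python
import os
import stat
import tempfile


def ensure_toolkit_dir(path: str) -> int:
    """Create the directory if needed, set mode 0770, and return the resulting mode."""
    os.makedirs(path, exist_ok=True)
    # chmod explicitly, because the mode passed to makedirs is affected by the umask.
    os.chmod(path, 0o770)
    return stat.S_IMODE(os.stat(path).st_mode)


if __name__ == "__main__":
    # Demo in a throwaway location; substitute the real toolkit path on each node.
    demo_path = os.path.join(tempfile.mkdtemp(), "toolkit")
    print(oct(ensure_toolkit_dir(demo_path)))
```

Running the same script with the same path on every node helps keep the location and permissions identical across the cluster.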

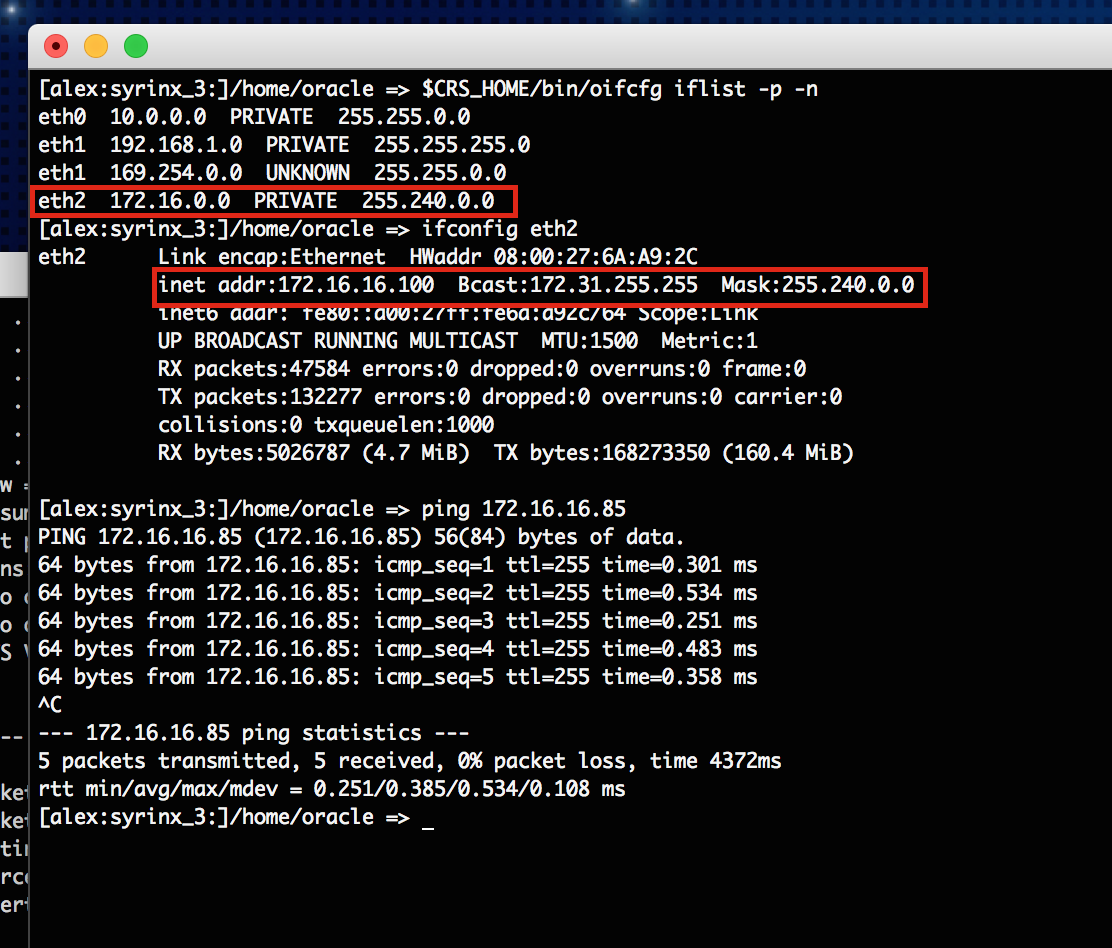

Identify the local IP address on the second network of the node which will be used for linking. Use the 'oifcfg' and 'ifconfig' commands to find this information. Then verify by pinging the Delphix Engine using its IP on the second network. Don't worry about the output from 'oifcfg' with respect to PUBLIC, PRIVATE or UNKNOWN values for the interfaces. These do not reflect actual CRS usage, but are rather based on the IP address range (RFC1918) - in the image they all show as PRIVATE.
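To see why an interface can be labelled PRIVATE purely because of its address, the sketch below tests an address against the three RFC1918 ranges. It only illustrates the range check; it does not reproduce how 'oifcfg' itself decides on its labels, and the function name is hypothetical.

```python
import ipaddress

# The three private IPv4 ranges defined by RFC1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address falls in one of the RFC1918 private ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


if __name__ == "__main__":
    # Sample addresses chosen only for illustration.
    for sample in ("10.1.2.3", "172.31.255.254", "172.32.0.1", "192.168.1.1"):
        print(sample, is_rfc1918(sample))
```

A cluster whose public, interconnect, and second networks all use addresses from these ranges would therefore show every interface as PRIVATE, regardless of how CRS actually uses them.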

Add a 'Standalone Host' type environment using the chosen cluster node's second network IP.

When discovery is finished, the Oracle homes on the cluster node should be discovered along with the listeners that run on that node. Once that is done, add the database that will eventually be a dSource by providing the db_unique_name, db_name, and the name of the instance that runs on the node that was added.

Then add a JDBC connect string using the node VIP and a service name.
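As an illustration, the sketch below assembles a connect string in the Oracle thin driver's `jdbc:oracle:thin:@//host:port/service` form. The VIP hostname, the service name, and the default port of 1521 are assumptions made for the example; use the values that apply to the cluster and confirm the format the Delphix interface expects.

```python
def jdbc_thin_url(vip_host: str, service_name: str, port: int = 1521) -> str:
    """Build an Oracle thin-driver JDBC URL from a node VIP and a service name."""
    return f"jdbc:oracle:thin:@//{vip_host}:{port}/{service_name}"


if __name__ == "__main__":
    # Hypothetical VIP hostname and service name.
    print(jdbc_thin_url("racnode1-vip.example.com", "orcl_svc"))
```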

Now click on the add dSource link. Follow the steps in Adding a dSource, making sure to set the Snapshot controlfile location in RMAN to a location that is visible to all cluster nodes, or snapsync will fail. An ASM diskgroup is a suitable location. Even if the Snapshot controlfile is not located in a shared location, it is still possible to link the source - the first snapsync will fail with a fault that relates to the Snapshot controlfile location, but subsequent snapsync operations should succeed.
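The Snapshot controlfile location is set with RMAN's `CONFIGURE SNAPSHOT CONTROLFILE NAME TO` command. The sketch below only formats that command for a given location so it can be pasted into an RMAN session; the helper name, the diskgroup `+DATA`, and the file name are hypothetical.

```python
def snapshot_controlfile_command(location: str) -> str:
    """Format the RMAN command that sets the Snapshot controlfile location."""
    return f"CONFIGURE SNAPSHOT CONTROLFILE NAME TO '{location}';"


if __name__ == "__main__":
    # Hypothetical ASM diskgroup and file name; any location visible to all nodes would do.
    print(snapshot_controlfile_command("+DATA/snapcf_orcl.f"))
```

The current setting can be reviewed in RMAN with `SHOW SNAPSHOT CONTROLFILE NAME;` before and after the change.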

JDBC connections will go over the public network and snapsync / logsync / environment monitor traffic will go over the second network.